Post Index

Technical Discussion | Analytical Calendar The Story | The Index Page

2023-09-08

Analytical Calendar

How to Use And the Reasons

Analytical Calendar is extremely useful to be used in interactive dashboard. It is, in fact, having all the core components when manipulating date and calendar but it is re-designed to satisfy a more simplified way to benefit both the analysts and developers.

On the front-end, because of the simplicity to have just analysis perspective and comparison perspective, the presentation can be more consistent. Instead of providing different charts for different date granularity, a single chart can already presented different period with different date granularity. More details will be shared later in this article.

On the back-end, because now everything is already pre-calculated in the data model, it does not require complicated expression to be developed. It greatly saves the effort for the developer. More details will be shared later in this article.

In short, this article focuses on the demonstration of the analytical calendar to share the benefits of it. And it also covers a bit on how it helps the analysts on selecting the date period with a few selections and how it helps developers to reduce the time and effort in design and development.

Let's start.

* this analytical calendar is a more enhanced version from my previous posts.

Two examples are shared in Github:

It is date level with complete week example and also with custom.

https://github.com/kongson-cheung/analytical-calendar/blob/main/Qlik%20Sense%20Analytical%20Calendar%20Examples/Analytical%20Calendar%205.0_Complete_Week_With_Custom.qvf

It is month level example.

https://github.com/kongson-cheung/analytical-calendar/blob/main/Qlik%20Sense%20Analytical%20Calendar%20Examples/Analytical%20Calendar%205.0_Month_Level.qvf

Analytical Calendar

Analytical calendar is a composite of tables associated together to provide time intelligent experience on usage and development. It is a generic design that can be applied in different kinds of interactive dashboard. The below will share the core component to let you understand how it can be used. There are four main components required user selection including:

- Date Perspective

- Unique Date

- Analysis Perspective

- Comparison Perspective

Only selecting these 4 elements, it is allowed to perform time intelligent date range selection.

Date Perspective

It is uncommon that analysis is performing in different granularity levels. Even if it is possible, it has a lot of assumptions to make it happen. Defining a proper date granularity, it makes the overall analysis consistent and easier. It named as Date Perspective to make it easier to understand by general users.

Date Perspective consists of only five values including Year, Quarter, Month, Week and Date. It is to ensure the proper date granularity of the overall analysis and more technically, it is controlling the corresponding selection to comply with the integrity. For example,

if Year is selected,

Unique Date: 2023, 2022, 2021, etc.

Analysis Perspective: Actual, Rolling 2 Years, etc.

Comparison Perspective: 1 Year before, 2 Year before, etc.

if Quarter is selected,

Unique Date: 2023-Q3, 2023-Q2, etc.

Analysis Perspective: Actual, YTQ, Rolling 2 Quarters, etc.

Comparison Perspective: 1 Year before, 1 Quarter before, etc.

if Month is selected,

Unique Date: 2023-Sep, 2023-Aug, etc.

Analysis Perspective: Actual, YTM, Rolling 2 Months, etc.

Comparison Perspective: 1 Year before, 1 Quarter before, 1 Month before, etc.

if Week is selected,

Unique Date: 2023-W41, 2023-W40, etc.

Analysis Perspective: Actual, YTW, Rolling 2 Weeks, etc

Comparison Perspective: 1 Year before, 1 Week before, etc.

if Date is selected,

Unique Date: 2023-09-09, 2023-09-08, etc.

Analysis Perspective: Actual, YTD, MYD, Rolling 2 Days, etc.

Comparison Perspective: 1 Year before, 1 Quarter before, 1 Month before, 1 Week before, 1 Day before, etc.

In any analysis, it is important to first define the date "perspective" for the analysis. It avoids the chance to lose focus when manipulating the date components compared to the traditional design.

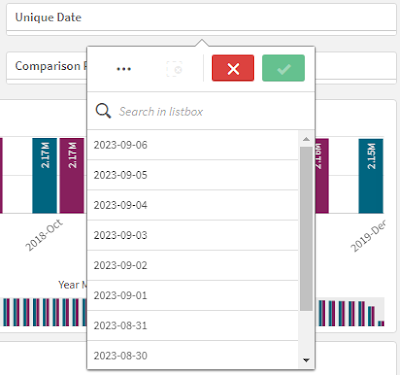

Unique Date

Unique Date is to further make clear the overall analysis in selecting the exact date representation in the specific date granularity.

In traditional design, it requires to first select Year then Quarter or Month then to Day. For developer, it is a disaster to determine the date granularity based on the selection. A lot of rule-based calculation is required to be defined at the expression to manipulate the selection. And because of this, this increases the chance to make multiple chart with the sake for different date level presentation.

For general users, it generates confusion, especially, in changing the selection or the view for the analysis. It needs first to remove the selection and then to apply the new selection. However, Year, Quarter, Month, Week, Day are conflicting with each other, e.g. if you select a month, you should not use week or vice versa. If the selection is overridden, there is no mechanism to alleviate the proper selection. All are just based on the human intelligence to rectify the date component selection.

Although for advanced users, it is definitely not a problem. The analytical calendar design still hopes to alleviate this issue by making use of the unique date.

Unique date is a union of all the date representations by granularity of Year, Quarter, Month, Week and Date.

For example,

Year: 2023, 2022, 2021, etc.

Quarter: 2023-Q3, 2023-Q2, etc.

Month: 2023-Sep, 2023-Aug, etc.

Week: 2023-W41, 2023-W40, etc.

Date: 2023-09-09, 2023-09-08, etc.

In this design, it just requires to select the exact date representation directly. There is no human interpretation and human computation required for the correct and accurate selection. In short, what you select is what you get.

Analysis Perspective

Analysis, in general, is always to deal a period. A period means a start date and an end date in the same granularity level. There are plenty kinds of representation available (to review), it looks the combination of a date plus the range type is more commonly used. For example, 2023-09-09 YTD, 2023-06 Rolling 6 Months, etc, i.e. a unique date plus the date range type. To be more precise, there is a date range type called Actual. It represents itself.

Analysis perspective also follows strictly on the date integrity. For example, Unique Date=2023, it does not allow you to select YTD, YTM, Rolling 5 months, etc. Only if the valid combinations fallen into the same date granularity, it allows the value for selection. In a lot of traditional design, this is not doing very well.

Comparison PerspectiveComparison is another important element in analysis. Instead of just looking at the current performance, it also very common to compare the historical performance to see whether it is good or bad.

The most common way to represent comparison is last year, last month, or a specific date. To make it more generic, it becomes last X Year, last X month or a custom date. Custom date means any unique date. It can be Year, Quarter, Month, Week or Date.

In the implementation, it represents X Years before, X Quarters before, X Months before, X Weeks before and X Days before. For custom date, it is a list of date representation similar to the Unique date. Similarly, there is date integrity applied. For example, if the 2023 Actual, it would not allow the selection of X Months before, X Weeks before because they are not in the valid granularity level.

Front-end Design Improvement

With the capability of the Analytical Calendar, the front-end now can be more neat and clean. The reason is because there is only two perspectives for periods, i.e. Analysis period and Comparison period.

Analysis period is the period for analysis. It is similar to the traditional design to select year, quarter, month, week and day. Comparison period is the period for comparison. In traditional design it is usually incorporated into the chart and each chart will represent a kind of comparison.

By making use of the Analytical Calendar, all charts now can be possible to provide either or both analysis and comparison period. There is no need to replicate the same chart to have each to show individual Year, Month or Week, etc for the KPI and the comparison. All charts are on-the-fly to provide the information based on the user selection.

In other words, it provide a coherent view in terms of usage and experience. To further let the analysts understand what is being analyzed, on the top left corner, there is a textbox showing both the selection details as well as the actual date range selected. In this way, it is crystal clear on what analysis is being performed and what comparison is being performed across from the selection to all the charts being shown in the sheet.

In other words, it provide a coherent view in terms of usage and experience. To further let the analysts understand what is being analyzed, on the top left corner, there is a textbox showing both the selection details as well as the actual date range selected. In this way, it is crystal clear on what analysis is being performed and what comparison is being performed across from the selection to all the charts being shown in the sheet.

Back-end Design Improvement

In order to use the Analytical Calendar, it requires only two variables named vSetAnalysisAnalysisCalendar and vSetAnalysisComparisonCalendar. The former is used for filtering the Analysis Perspective period while the latter is used for filtering the Comparison Perspective period.

The variable vSetAnalysisAnalysisCalendar is very simple as illustrated below. It just requires to set the %CONTROL_PERSPECTIVE equals to ANALYSIS and make sure there is no Comparison Perspective.

Similarly, the variable vSetAnalysisComparisonCalendar is also very simple as illustrated below. It just requires to ignore the %CONTROL_PERSPECTIVE equals to ANALYSIS.

With these two variables, all the expression in the chart development is straightforward. It just needs to apply the set analysis for the two variables.

When requiring Analysis Perspective, the below set analysis can be used.

When requiring Comparison Perspective, the below set analysis can be used.

As the front-end is now consistent and coherent, the same expression can be applied and across all the charts. Unless, there are specific chart would be shown, these two kinds of expressions can satisfy a majority of the use cases. Definitely, the Analytical Calendar can also be applied to more advanced use scenarios which would not cover here.

Other Advantages

Indeed, the Analytical Calendar also has other advantages. In designing the data model, majority of the time would fall into a situation to pre-build a data or to calculate on the fly. The rule of thumb is always to pre-build it if possible to minimize the computation required during use.

When manipulating date, there are also a few scenarios that might not be generic for every usage. For example, if today is 2023-09-09, should it allow the selection of 2023-Sep? In my experience, it depends on the flavor of the end users but this becomes a question of developers for a proper expression development. Another example is the cross year week. There are different ways to handle the incomplete week like making it full week and count as the previous year week or the let the week to be incomplete. This is a headache not just to developer but also the analysts using the interactive dashboards.

Thus, the design of Analytical Calendar also incorporates this element. It allows to pre-calculate all these into the data model and do not need very complicated on-the-fly expression to calculate the results. It can still maintain as Analysis Perspective and Comparison Perspective. The below are some scenarios for sharing.

This concludes how to use the Analytical Calendar. Please leave me message if further discussion is needed.

Thank you for reading. I hope it help you. Just leave a message if you have any discussion/share want to make.

* If you like my post, support me buy me coffee https://buymeacoffee.com/kongsoncheung.