2023-07-19

Migrating Qlik Sense items using APIs

Several Qlik Sense (QS) items need to be migrated during a Qlik Sense server migration. This article focuses on two core components to illustrate the concepts and steps involved. First, it discusses app migration, which covers the App, App Object and App Content. Then it discusses reload task migration, which covers the Reload Task, Schema Event and Composite Event.

For other items, the concept is similar. I am happy to help if you have questions.

General QRS API Call

More technically, Qlik Sense Server Migration involves a few types of QRS API calls:

1) GET

GET obtains the metadata in JSON. This JSON is then modified or trimmed so that it can be sent by POST/PUT to the new cloud server.

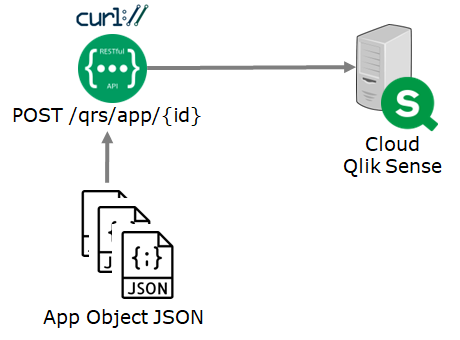

2) POST

The POST command generally creates a new item in the QS server.

3) PUT

The PUT command usually updates a QS item that already exists in the server.

POST and PUT usually require details to be provided in the HTTP body. It is much easier to GET the metadata directly from the on-premises server and modify the necessary parts of the JSON for the POST and PUT calls. One obvious example is the modified date, which must be later than the item's last modified date. More relevant to this article is the app ID: since a new app ID is generated after upload, a mapping between the old and new IDs should be maintained in order to update the JSON for the POST and PUT calls.
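The body preparation described above can be sketched in Python. This is a minimal sketch under assumptions, not the exact procedure used in the migration: the field names `modifiedDate` and the nested `app` / `id` reflect how QRS entities are usually shaped, the helper names `prepare_body` and `qrs_request` are hypothetical, and authentication (certificate or header based) is left out. QRS calls also expect an `xrfkey` query parameter that matches an `X-Qlik-Xrfkey` header, which the request builder adds.

```python
import copy
import json
import secrets
import string
import urllib.request
from datetime import datetime, timezone


def prepare_body(entity, app_id_map, now=None):
    """Return a copy of GET metadata adjusted for POST/PUT on the new server."""
    body = copy.deepcopy(entity)
    now = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    # Assumption: a fresh UTC timestamp is later than the last modified date.
    body["modifiedDate"] = (
        now.strftime("%Y-%m-%dT%H:%M:%S.") + f"{now.microsecond // 1000:03d}Z"
    )
    # Swap the on-premises app ID for the ID generated after upload.
    app = body.get("app")
    if isinstance(app, dict) and app.get("id") in app_id_map:
        app["id"] = app_id_map[app["id"]]
    return body


def qrs_request(base_url, method, path, body=None):
    """Build (but do not send) a QRS request with a matching Xrfkey pair."""
    alphabet = string.ascii_letters + string.digits
    xrfkey = "".join(secrets.choice(alphabet) for _ in range(16))
    separator = "&" if "?" in path else "?"
    url = f"{base_url}{path}{separator}xrfkey={xrfkey}"
    data = None if body is None else json.dumps(body).encode("utf-8")
    request = urllib.request.Request(url, data=data, method=method)
    request.add_header("X-Qlik-Xrfkey", xrfkey)
    request.add_header("Content-Type", "application/json")
    return request
```

Keeping `app_id_map` (old app ID to new app ID) as a plain dictionary means the same mapping can be reused for every item that references an app.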

App Object ID vs Engine Object ID

These two IDs are tricky and easily confused. When an app object is created, a unique GUID is generated; this is the App Object ID, which QS uses internally for the QMC. At the same time, the Engine Object ID is also created. It is unique within the app, distinguishes the object there, and is the ID used inside the qvf.

In the QRS API, the app object ID refers to the App Object ID. However, the QMC and the link show the Engine Object ID.
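Because the Engine Object ID lives inside the qvf, it can serve as the join key between the two servers. The sketch below assumes that the app object metadata exposes the fields `id` and `engineObjectId`, and that the App Object ID is regenerated on import while the Engine Object ID is kept. Both function names are hypothetical.

```python
def index_by_engine_id(app_objects):
    """Map each Engine Object ID to its QRS App Object ID (one app only)."""
    return {obj["engineObjectId"]: obj["id"] for obj in app_objects}


def map_object_ids(source_objects, target_objects):
    """Pair source and target App Object IDs via the shared Engine Object ID."""
    target_index = index_by_engine_id(target_objects)
    return {
        obj["id"]: target_index[obj["engineObjectId"]]
        for obj in source_objects
        if obj["engineObjectId"] in target_index
    }
```

Since the Engine Object ID is only unique within an app, both lists should hold the objects of a single app.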

QS Application Migration

Before migrating QS applications, some QS items should be migrated first, for instance data connections, extensions, content libraries, streams, etc. This ensures the applications can make use of all these components.

In fact, migrating an application is similar to what would be done manually, with the following steps:

1) Export the app with data, covering all the base, shared and private app objects.

2) Import the app with data, including all the exported app objects, and maintain the ownership.

3) Publish the app to a stream (if necessary).

4) Unapprove the app objects.

5) Unpublish the app objects.

6) Change the owner of the app objects to maintain all the ownership.

* If the app is exported without data, the "always one selected value" setting on fields will be lost. If you are certain that the application does not use this setting, it is recommended to export without data and reload afterwards.

Export App

There are three methods available to obtain the QS app with all its app objects:

1) Use the QRS API /qrs/app/exportapps (refer to the May 2023 version)

This is only available in the May 2023 version of Qlik Sense or later. The export scope should be set to "all".

2) Copy the app directly from the Qlik shared folder, i.e. \\server-name\QlikShare\Apps

This folder contains all the Qlik Sense applications on the Qlik Sense server. The files in this folder are named by the QS application ID, without an extension. These files are actually binary qvf files.

3) Publish/Approve the sheets and then export the app.

This method has an impact on end users because the private and community app objects will be published and approved to become base app objects. After triggering the export, the app objects can be unapproved and unpublished straight away, but these operations still take a while. If the server is frozen, this method is a good choice.

This method requires the following QRS APIs:

1) PUT /qrs/app/object/{id}/publish

To publish app objects into sharing state.

2) POST /qrs/app/object/{id}/approve

To approve app objects into base state.

To create the download link and export the app

4) GET /qrs/download/app/{id}/{exportTicketId}/{fileName}

To download the app based on the download link

5) * If the app object state needs to be restored, POST /qrs/app/object/{id}/unapprove

To unapprove the app objects back to sharing state.

6) * If the app object state needs to be restored, PUT /qrs/app/object/{id}/unpublish

To unpublish the app objects back to private / personal state.
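The publish/approve and unapprove/unpublish calls listed above can be planned from the app object metadata before anything is sent. This is a minimal sketch, assuming each object carries boolean `published` and `approved` fields; the function name `plan_export_calls` is hypothetical, and the endpoints are the ones listed in this article.

```python
def plan_export_calls(app_objects):
    """Return (before, after) lists of (method, path) for an export.

    'before' promotes every object to base state so the export includes it;
    'after' restores the original sharing or private state.
    """
    before, after = [], []
    for obj in app_objects:
        object_id = obj["id"]
        was_published = obj["published"]
        was_approved = obj["approved"]
        if not was_published:
            before.append(("PUT", f"/qrs/app/object/{object_id}/publish"))
        if not was_approved:
            before.append(("POST", f"/qrs/app/object/{object_id}/approve"))
            # Undo in reverse order: unapprove first, then unpublish.
            after.append(("POST", f"/qrs/app/object/{object_id}/unapprove"))
        if not was_published:
            after.append(("PUT", f"/qrs/app/object/{object_id}/unpublish"))
    return before, after
```

Recording the original state before the first call matters, because once everything is approved the metadata no longer tells private, community and base objects apart.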

Import App

There are two APIs available for importing the QVF into the Qlik Sense server:

This requires the qvf to be placed first in the app folder, C:\ProgramData\Qlik\Sense\App

This method is recommended.

Of course, manual upload is also possible.

Publish App to Stream

The next step is to publish the app to a stream with the QRS API.

Some applications might be in the work stream, which does not require this step.

Unapprove App and Change Ownership

This step is a bit tricky because we are not using the unapprove API. Instead, the app object PUT is used. As a result, we can update both the approved flag and the app object ownership in one go.
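A single PUT body that restores both the approved flag and the owner could be assembled as follows. This is a sketch under assumptions: the fields `approved`, `owner`, `userDirectory`, `userId` and `modifiedDate` are taken to be present in the app object metadata, `target_user_ids` is a hypothetical lookup of user IDs on the new server, and it is assumed that the owner can be referenced by `id` alone.

```python
import copy
from datetime import datetime, timezone


def build_object_update(target_obj, source_obj, target_user_ids, now=None):
    """Build the body for PUT /qrs/app/object/{id} on the new server.

    target_obj: metadata of the imported object (GET on the new server)
    source_obj: metadata of the same object on the on-premises server
    target_user_ids: {(userDirectory, userId): user ID on the new server}
    """
    body = copy.deepcopy(target_obj)
    # Restore the original approved flag instead of calling unapprove.
    body["approved"] = source_obj["approved"]
    # Restore the original owner in the same request.
    owner = source_obj["owner"]
    body["owner"] = {"id": target_user_ids[(owner["userDirectory"], owner["userId"])]}
    now = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    body["modifiedDate"] = (
        now.strftime("%Y-%m-%dT%H:%M:%S.") + f"{now.microsecond // 1000:03d}Z"
    )
    return body
```

Users are matched by directory and user ID rather than by GUID, since the user GUIDs are unlikely to be the same on both servers.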

Unpublish App

The last step is to unpublish the app objects if they are private/personal.

PUT /qrs/app/object/{id}/unpublish

*** Note if binary QVF is used

The binary qvf files contain all the app objects, even those that have been deleted (reference). So there should be a step to remove the redundant objects. These can easily be identified by cross-checking with the app object metadata and then removed with DELETE /qrs/app/object/{id}.
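The cross-check can be sketched as a set difference on the Engine Object ID. The field names `id` and `engineObjectId` are assumptions about the app object metadata, and both helper names are hypothetical.

```python
def redundant_object_ids(source_objects, imported_objects):
    """Return App Object IDs that exist after import but not in the source metadata."""
    known_engine_ids = {obj["engineObjectId"] for obj in source_objects}
    return [
        obj["id"]
        for obj in imported_objects
        if obj["engineObjectId"] not in known_engine_ids
    ]


def delete_paths(object_ids):
    """Build the DELETE paths for the redundant objects."""
    return [f"/qrs/app/object/{object_id}" for object_id in object_ids]
```

Here `source_objects` is the metadata of the app on the on-premises server, and `imported_objects` is the metadata of the same app after the binary qvf has been imported.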

And there are a few more drawbacks:

i) If the application references in-app pictures, the links are broken. One way to fix this is to make use of the Engine JSON API to replace the on-premises app ID with the cloud app ID.

ii) The app properties are also lost. This needs to be fixed by PUT /qrs/app/{id}. It requires a JSON that can be obtained by GET /qrs/app/{id}.

iii) The app content is lost and needs to be uploaded with POST /qrs/appcontent/{appid}/uploadfile. The physical content files can be found in \\server-name\QlikShare\Static Content\App Content.
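For the broken picture links in i), the replacement itself is a plain string substitution over the object properties. The sketch below assumes the properties have already been read through the Engine JSON API as nested dictionaries and lists, and that the picture links embed the app ID as text. Reading and writing the properties is not shown, and the function name is hypothetical.

```python
def replace_app_id(value, old_app_id, new_app_id):
    """Recursively replace the old app ID in every string of a JSON-like value."""
    if isinstance(value, str):
        return value.replace(old_app_id, new_app_id)
    if isinstance(value, list):
        return [replace_app_id(item, old_app_id, new_app_id) for item in value]
    if isinstance(value, dict):
        return {
            key: replace_app_id(item, old_app_id, new_app_id)
            for key, item in value.items()
        }
    # Numbers, booleans and None are returned unchanged.
    return value
```

The function returns a new structure and leaves its input untouched, so the original properties can be kept for comparison.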

QS Reload Task Migration

The reload task migration is less complicated than the app migration. The only tricky part is making sure the reload task points to the updated app ID. A reload task always comes with triggers, where

a) A schema event is based on a time schedule.

b) A composite event is used to chain app reloads.

Overall, the below API calls will be used to obtain the metadata.

The below API call will be used to create the reload task, the schema events and the composite events.
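Before the reload task and its events are created on the new server, their JSON needs the same treatment as the app objects: drop the server-generated fields and swap in the new IDs. This is a minimal sketch, assuming the metadata uses the fields `app`, `reloadTask` and `compositeRules`. The list of fields to strip and both function names are illustrative assumptions.

```python
import copy

# Assumption: these fields are generated by the server and should not be sent.
SERVER_FIELDS = ("id", "createdDate", "modifiedDate", "modifiedByUserName")


def prepare_reload_task(task, app_id_map):
    """Point a reload task at the app ID generated on the new server."""
    body = copy.deepcopy(task)
    for field in SERVER_FIELDS:
        body.pop(field, None)
    body["app"] = {"id": app_id_map[body["app"]["id"]]}
    return body


def prepare_event(event, task_id_map):
    """Point a schema or composite event at the newly created reload tasks."""
    body = copy.deepcopy(event)
    for field in SERVER_FIELDS:
        body.pop(field, None)
    body["reloadTask"] = {"id": task_id_map[body["reloadTask"]["id"]]}
    # Composite events also reference the upstream tasks they wait for.
    for rule in body.get("compositeRules", []):
        for field in SERVER_FIELDS:
            rule.pop(field, None)
        rule["reloadTask"] = {"id": task_id_map[rule["reloadTask"]["id"]]}
    return body
```

Composite events reference other reload tasks, so `task_id_map` (old task ID to new task ID) has to be complete before the events are prepared.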

Thank you for reading. I hope you find it useful. See you in the next post :)

* If you like my post, you can support me by buying me a coffee: https://buymeacoffee.com/kongsoncheung.
